HISTORY

The

first computers were people! That is, electronic computers (and the earlier

mechanical computers) were given this name because they performed the work that

had previously been assigned to people. "Computer" was originally a

job title: it was used to describe those human beings (predominantly women)

whose job it was to perform the repetitive calculations required to compute such

things as navigational tables, tide charts, and planetary positions for

astronomical almanacs. Imagine you had a job where hour after hour, day after

day, you were to do nothing but compute multiplications. Boredom would quickly

set in, leading to carelessness, leading to mistakes. And even on your best days

you wouldn't be producing answers very fast. Therefore, inventors have been

searching for hundreds of years for a way to mechanize (that is, find a

mechanism that can perform) this task.

This

picture shows what were known as "counting tables" [photo courtesy

IBM]

A

typical computer operation back when computers were people.

The

abacus was an early aid for mathematical computations. Its only

value is that it aids the memory of the human performing the calculation. A

skilled abacus operator can work on addition and subtraction problems at the

speed of a person equipped with a hand calculator (multiplication and division

are slower). The abacus is often wrongly attributed to China. In fact, the

oldest surviving abacus was used in 300 B.C. by the Babylonians. The abacus is

still in use today, principally in the Far East. A modern abacus consists of

rings that slide over rods, but the older one pictured below dates from the time

when pebbles were used for counting (the word "calculus" comes from

the Latin word for pebble).

A

very old abacus

A

more modern abacus. Note how the abacus is really just a representation of the

human fingers: the 5 lower rings on each rod represent the 5 fingers and the 2

upper rings represent the 2 hands.

In 1614 an eccentric (some say mad) Scotsman named John Napier published his
invention of logarithms, a technique that allows multiplication to be performed
via addition. The magic ingredient is the logarithm of each operand, which was
originally obtained from a printed table. In 1617 Napier also described a
separate multiplication aid, in which multiplication tables were carved on ivory
sticks which are now called Napier's Bones.
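The idea of multiplying by adding logarithms can be sketched in a few lines of Python. This is only an illustration: the function name `multiply_via_logs` is made up here, and the call to `math.log10` stands in for looking a value up in a printed table.

```python
import math

def multiply_via_logs(a: float, b: float) -> float:
    """Multiply two positive numbers by adding their base-10 logarithms."""
    # "Look up" the logarithm of each operand (a printed table in Napier's day).
    log_sum = math.log10(a) + math.log10(b)
    # The antilogarithm of the sum is the product.
    return 10 ** log_sum

# The result agrees with ordinary multiplication up to rounding error.
product = multiply_via_logs(12.0, 25.0)
print(round(product, 6))
```

A slide rule performs the same addition mechanically, by sliding two logarithmic scales against each other.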

An

original set of Napier's Bones [photo courtesy IBM]

A

more modern set of Napier's Bones

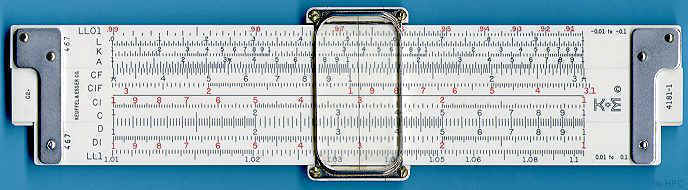

Napier's

invention led directly to the slide rule, first built in England

in 1632 and still in use in the 1960's by the NASA engineers of the Mercury,

Gemini, and Apollo programs which landed men on the moon.

A

slide rule

Leonardo

da Vinci (1452-1519) made drawings of gear-driven calculating machines but

apparently never built any.

A

Leonardo da Vinci drawing showing gears arranged for computing

The

first gear-driven calculating machine to actually be built was probably the calculating

clock, so named by its inventor, the German professor Wilhelm Schickard

in 1623. This device got little publicity because Schickard died soon afterward

of the bubonic plague.

Schickard's

Calculating Clock

In

1642 Blaise Pascal, at age 19, invented the Pascaline as an aid

for his father who was a tax collector. Pascal built 50 of this gear-driven

one-function calculator (it could only add) but couldn't sell many because of

their exorbitant cost and because they really weren't that accurate (at that

time it was not possible to fabricate gears with the required precision). Up

until the present age when car dashboards went digital, the odometer portion of

a car's speedometer used the very same mechanism as the Pascaline to increment

the next wheel after each full revolution of the prior wheel. Pascal was a child

prodigy. At the age of 12, he was discovered doing his version of Euclid's

thirty-second proposition on the kitchen floor. Pascal went on to invent

probability theory, the hydraulic press, and the syringe. Shown below is an 8

digit version of the Pascaline, and two views of a 6 digit version:

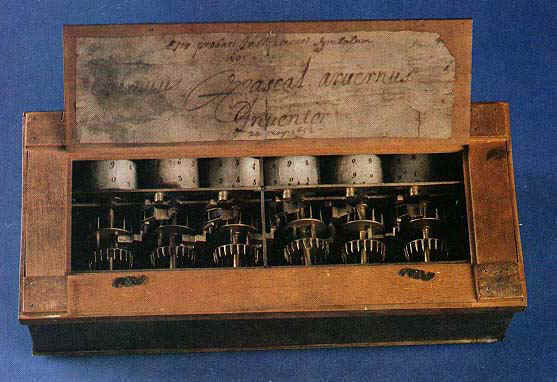

Pascal's

Pascaline [photo © 2002 IEEE]

A

6 digit model for those who couldn't afford the 8 digit model
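The carry mechanism described above, where each wheel advances the next one after a full revolution, can be sketched in Python. This is an illustration only: the function name `increment` and the list-of-digits representation are assumptions made here, not a model of Pascal's actual gearing.

```python
def increment(wheels: list[int]) -> list[int]:
    """Add 1 to a row of decimal wheels, least significant wheel first."""
    result = wheels.copy()
    for i in range(len(result)):
        result[i] += 1
        if result[i] < 10:
            break          # no full revolution, so no carry to the next wheel
        result[i] = 0      # wheel completed a revolution: reset it and carry on
    return result

# 099 + 1 = 100 (wheels are listed units first)
print(increment([9, 9, 0]))
```

When every wheel shows 9, the row rolls over to all zeros, just as an old mechanical odometer does.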

Just

a few years after Pascal, the German Gottfried Wilhelm Leibniz (co-inventor with

Leibniz's

Stepped Reckoner (have you ever heard "calculating" referred to as

"reckoning"?)

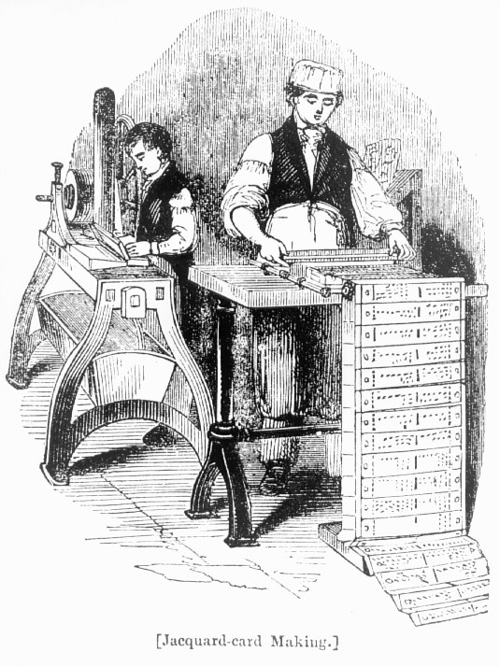

In

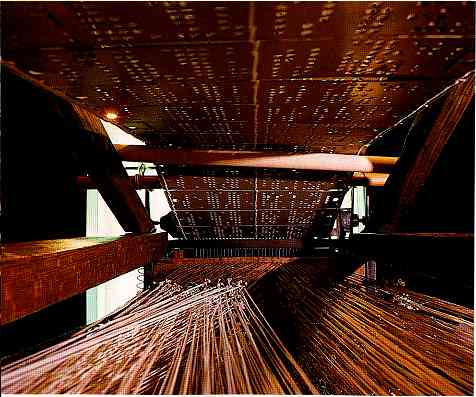

1801 the Frenchman Joseph Marie Jacquard invented a power loom that could base

its weave (and hence the design on the fabric) upon a pattern automatically read

from punched wooden cards, held together in a long row by rope. Descendants of

these punched cards have been in use ever since (remember the

"hanging chad" from the

Jacquard's

Loom showing the threads and the punched cards

By

selecting particular cards for Jacquard's loom you defined the woven pattern

[photo © 2002 IEEE]

A

close-up of a Jacquard card

This

tapestry was woven by a Jacquard loom

Jacquard's

technology was a real boon to mill owners, but put many loom operators out of

work. Angry mobs smashed Jacquard looms and once attacked Jacquard himself.

History is full of examples of labor unrest following technological innovation

yet most studies show that, overall, technology has actually increased the

number of jobs.

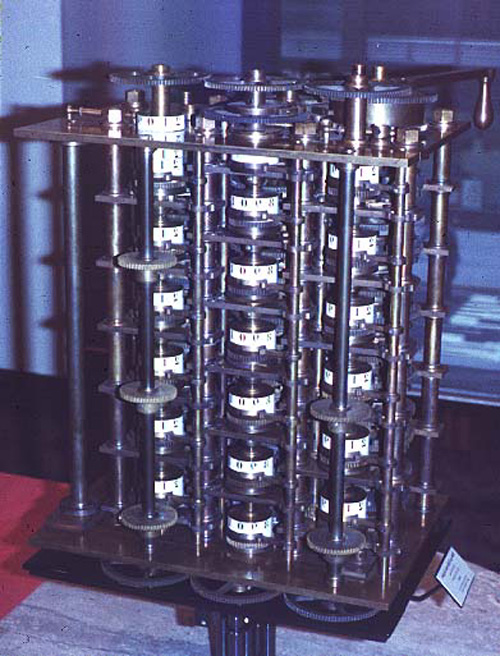

By

1822 the English mathematician Charles Babbage was proposing a

steam driven calculating machine the size of a room, which he called the Difference

Engine. This machine would be able to compute tables of numbers, such as

logarithm tables. He obtained government funding for this project due to the

importance of numeric tables in ocean navigation. By promoting their commercial

and military navies, the British government had managed to become the earth's

greatest empire. But in that time frame the British government was publishing a

seven volume set of navigation tables which came with a companion volume of

corrections which showed that the set had over 1000 numerical errors. It was

hoped that Babbage's machine could eliminate errors in these types of tables.

But construction of Babbage's Difference Engine proved exceedingly difficult and

the project soon became the most expensive government funded project up to that

point in English history. Ten years later the device was still nowhere near

complete, acrimony abounded between all involved, and funding dried up. The

device was never finished.

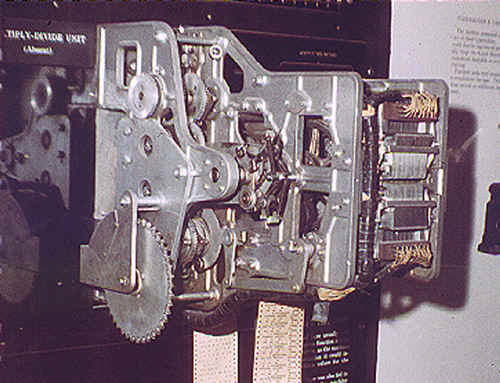

A

small section of the type of mechanism employed in Babbage's Difference Engine

[photo © 2002 IEEE]

Babbage

was not deterred, and by then was on to his next brainstorm, which he called the

Analytical Engine. This device, large as a house and powered by 6

steam engines, would be more general purpose in nature because it would be

programmable, thanks to the punched card technology of Jacquard. But it was

Babbage who made an important intellectual leap regarding the punched cards. In

the Jacquard loom, the presence or absence of each hole in the card physically

allows a colored thread to pass or stops that thread (you can see this clearly

in the earlier photo). Babbage saw that the pattern of holes could be used to

represent an abstract idea such as a problem statement or the raw data required

for that problem's solution. Babbage saw that there was no requirement that the

problem matter itself physically pass through the holes.

Furthermore,

Babbage realized that punched paper could be employed as a storage mechanism,

holding computed numbers for future reference. Because of the connection to the

Jacquard loom, Babbage called the two main parts of his Analytical Engine the

"Store" and the "Mill", as both terms are used in the

weaving industry. The Store was where numbers were held and the Mill was where

they were "woven" into new results. In a modern computer these same

parts are called the memory unit and the central processing

unit (CPU).

The

Analytical Engine also had a key function that distinguishes computers from

calculators: the conditional statement. A conditional statement allows a program

to achieve different results each time it is run: the path of the program (that
is, which statements are executed next) is chosen according to a condition or
situation that is detected at the very moment the program is running.
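A conditional statement in a modern language can be sketched as follows. The example is hypothetical: the function `describe` and its account-balance scenario are invented here purely to show a program taking different paths depending on a value known only at run time.

```python
def describe(balance: float) -> str:
    """Return a different message depending on the value seen at run time."""
    # The condition is tested while the program runs; which statement
    # executes next depends on the outcome of that test.
    if balance < 0:
        return "overdrawn"
    return "in credit"

print(describe(-5.0))
print(describe(20.0))
```

The same program text produces different results for different inputs, which is the capability a fixed-function calculator lacks.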

Babbage

befriended Ada Byron, the daughter of the famous poet Lord Byron (

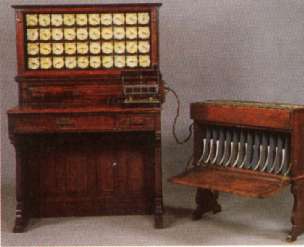

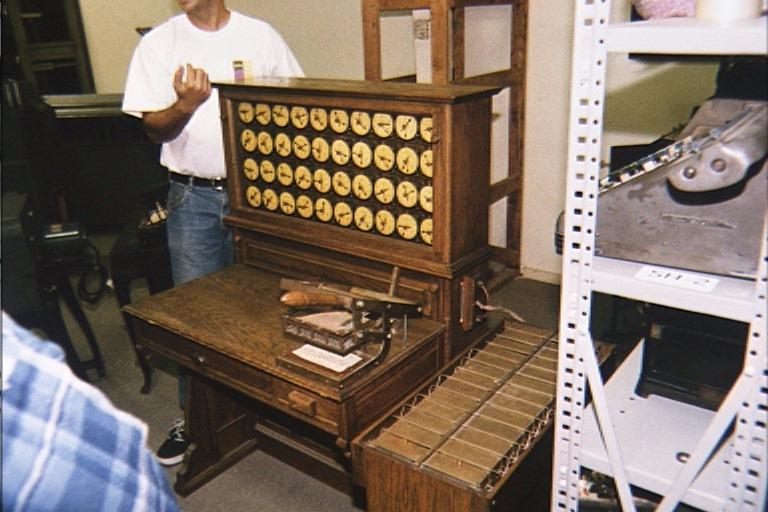

Hollerith's

invention, known as the Hollerith desk, consisted of a card reader

which sensed the holes in the cards, a gear driven mechanism which could count

(using Pascal's mechanism which we still see in car odometers), and a large wall

of dial indicators (a car speedometer is a dial indicator) to display the

results of the count.

An

operator working at a Hollerith Desk like the one below

A

few Hollerith desks still exist today [photo courtesy The

The

patterns on Jacquard's cards were determined when a tapestry was designed and

then were not changed. Today, we would call this a read-only form

of information storage. Hollerith had the insight to convert punched cards to

what is today called a read/write technology. While riding a

train, he observed that the conductor didn't merely punch each ticket, but

rather punched a particular pattern of holes whose positions indicated the

approximate height, weight, eye color, etc. of the ticket owner. This was done

to keep anyone else from picking up a discarded ticket and claiming it was his

own (a train ticket did not lose all value when it was punched because the same

ticket was used for each leg of a trip). Hollerith realized how useful it would

be to punch (write) new cards based upon an analysis (reading) of some other set

of cards. Complicated analyses, too involved to be accomplished during a single

pass thru the cards, could be accomplished via multiple passes thru the cards

using newly punched cards to remember the intermediate results. Unknown to

Hollerith, Babbage had proposed this long before.

Hollerith's

technique was successful and the 1890 census was completed in only 3 years at a

savings of 5 million dollars. Interesting aside: the reason that a person who

removes inappropriate content from a book or movie is called a censor, as is a

person who conducts a census, is that in Roman society the public official

called the "censor" had both of these jobs.

Hollerith

built a company, the Tabulating Machine Company which, after a few buyouts,

eventually became International Business Machines, known today as IBM.

IBM grew rapidly and punched cards became ubiquitous. Your gas bill would arrive

each month with a punch card you had to return with your payment. This punch

card recorded the particulars of your account: your name, address, gas usage,

etc. (I imagine there were some "hackers" in those days who would

alter the punch cards to change their bill). As another example, when you

entered a toll way (a highway that collects a fee from each driver) you were

given a punch card that recorded where you started and then when you exited from

the toll way your fee was computed based upon the miles you drove. When you

voted in an election the ballot you were handed was a punch card. The little

pieces of paper that are punched out of the card are called "chad" and

were thrown as confetti at weddings. Until recently all Social Security and

other checks issued by the Federal government were actually punch cards. The

check-out slip inside a library book was a punch card. Written on all these

cards was a phrase as common as "close cover before striking":

"do not fold, spindle, or mutilate". A spindle was an upright spike on

the desk of an accounting clerk. As he completed processing each receipt he

would impale it on this spike. When the spindle was full, he'd run a piece of

string through the holes, tie up the bundle, and ship it off to the archives.

You occasionally still see spindles at restaurant cash registers.

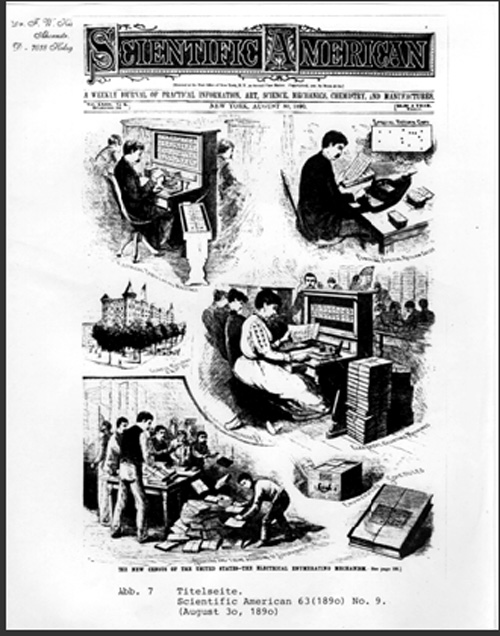

Incidentally,

the Hollerith census machine was the first machine to ever be featured on a

magazine cover.

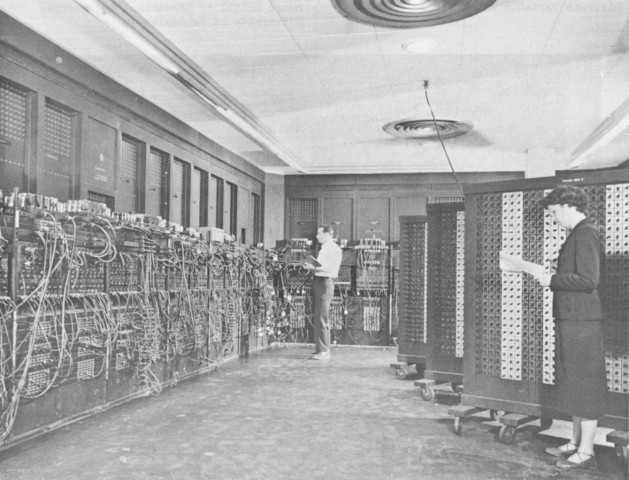

The

title of forefather of today's all-electronic digital computers is usually

awarded to ENIAC, which stood for Electronic Numerical Integrator

and Calculator. ENIAC was built at the

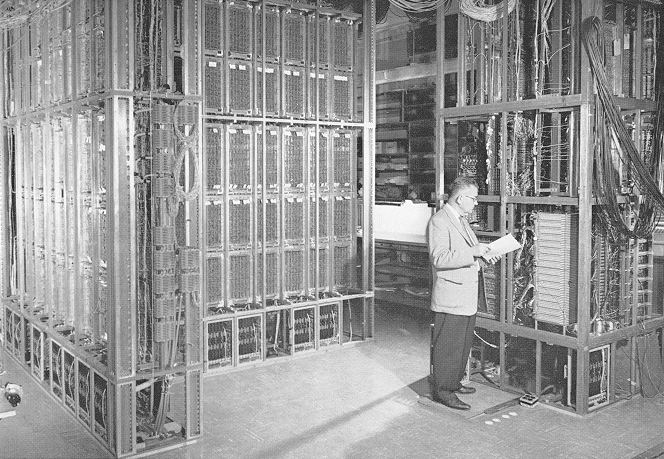

ENIAC

filled a 20 by 40 foot room, weighed 30 tons, and used more than 18,000 vacuum

tubes. Like the Mark I, ENIAC employed paper card readers obtained from IBM

(these were a regular product for IBM, as they were a long established part of

business accounting machines, IBM's forte). When operating, the ENIAC was silent

but you knew it was on as the 18,000 vacuum tubes each generated waste heat like

a light bulb and all this heat (174,000 watts of heat) meant that the computer

could only be operated in a specially designed room with its own heavy duty air

conditioning system. Only the left half of ENIAC is visible in the first
picture; the right half was basically a mirror image of what's visible.

Two

views of ENIAC: the "Electronic Numerical Integrator and Calculator"

(note that it wasn't even given the name of computer since "computers"

were people) [

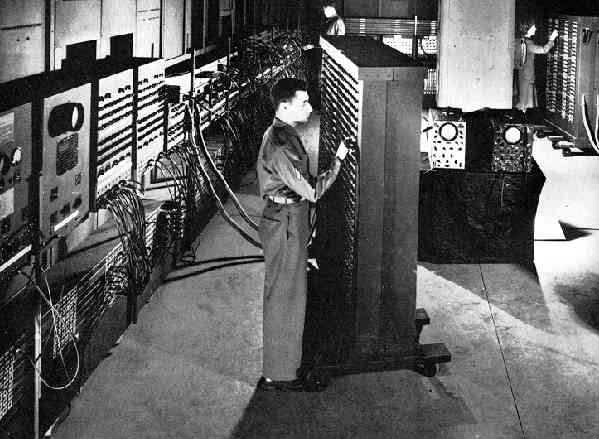

To

reprogram the ENIAC you had to rearrange the patch cords that you can observe on

the left in the prior photo, and the settings of 3000 switches that you can

observe on the right. To program a modern computer, you type out a program with

statements like:

To

perform this computation on ENIAC you had to rearrange a large number of patch

cords and then locate three particular knobs on that vast wall of knobs and set

them to 3, 1, and 4.

Reprogramming

ENIAC involved a hike [

Once

the army agreed to fund ENIAC, Mauchly and Eckert worked around the clock, seven

days a week, hoping to complete the machine in time to contribute to the war.

Their war-time effort was so intense that most days they ate all 3 meals in the

company of the army Captain who was their liaison with their military sponsors.

They were allowed a small staff but soon observed that they could hire only the

most junior members of the

One

of the most obvious problems was that the design would require 18,000 vacuum

tubes to all work simultaneously. Vacuum tubes were so notoriously unreliable

that even twenty years later many neighborhood drug stores provided a "tube

tester" that allowed homeowners to bring in the vacuum tubes from their

television sets and determine which one of the tubes was causing their TV to

fail. And television sets only incorporated about 30 vacuum tubes. The device

that used the largest number of vacuum tubes was an electronic organ: it

incorporated 160 tubes. The idea that 18,000 tubes could function together was

considered so unlikely that the dominant vacuum tube supplier of the day, RCA,

refused to join the project (but did supply tubes in the interest of

"wartime cooperation"). Eckert solved the tube reliability problem

through extremely careful circuit design. He was so thorough that before he

chose the type of wire cabling he would employ in ENIAC he first ran an

experiment where he starved lab rats for a few days and then gave them samples

of all the available types of cable to determine which they least liked to eat.

Here's a look at a small number of the vacuum tubes in ENIAC:

Even

with 18,000 vacuum tubes, ENIAC could only hold 20 numbers at a time. However,

thanks to the elimination of moving parts it ran much faster than the Mark I: a

multiplication that required 6 seconds on the Mark I could be performed on ENIAC

in 2.8 thousandths of a second. ENIAC's basic clock speed was 100,000 cycles per

second. Today's home computers employ clock speeds of 1,000,000,000 cycles per

second. Built with $500,000 from the U.S. Army, ENIAC's first task was to

compute whether or not it was possible to build a hydrogen bomb (the atomic bomb

was completed during the war and hence is older than ENIAC). The very first

problem run on ENIAC required only 20 seconds and was checked against an answer

obtained after forty hours of work with a mechanical calculator. After chewing

on half a million punch cards for six weeks, ENIAC did humanity no favor when it

declared the hydrogen bomb feasible. This first ENIAC program remains classified

even today.

Once

ENIAC was finished and proved worthy of the cost of its development, its

designers set about to eliminate the obnoxious fact that reprogramming the

computer required a physical modification of all the patch cords and switches.

It took days to change ENIAC's program. Eckert and Mauchly next teamed up with

the mathematician John von Neumann to design EDVAC,

which pioneered the stored program. Because he was the first to

publish a description of this new computer, von Neumann is often wrongly

credited with the realization that the program (that is, the sequence of

computation steps) could be represented electronically just as the data was. But

this major breakthrough can be found in Eckert's notes long before he ever

started working with von Neumann. Eckert was no slouch: while in high school

Eckert had scored the second highest math SAT score in the entire country.

After

ENIAC and EDVAC came other computers with humorous names such as ILLIAC,

JOHNNIAC, and, of course, MANIAC. ILLIAC was built at the University of Illinois

at Champaign-Urbana, which is probably why the science fiction author Arthur C.

Clarke chose to have the HAL computer of his famous book "2001: A Space

Odyssey" born at Champaign-Urbana. Have you ever noticed that you can shift

each of the letters of IBM backward by one alphabet position and get HAL?

ILLIAC

II built at the

HAL

from the movie "2001: A Space Odyssey". Look at the previous picture

to understand why the movie makers in 1968 assumed computers of the future would

be things you walk into.

JOHNNIAC

was a reference to John von Neumann, who was unquestionably a genius. At age 6

he could tell jokes in classical Greek. By 8 he was doing calculus. He could

recite books he had read years earlier word for word. He could read a page of

the phone directory and then recite it backwards. On one occasion it took von

Neumann only 6 minutes to solve a problem in his head that another professor had

spent hours on using a mechanical calculator. Von Neumann is perhaps most famous

(infamous?) as the man who worked out the complicated method needed to detonate

an atomic bomb.

Once

the computer's program was represented electronically, modifications to that

program could happen as fast as the computer could compute. In fact, computer

programs could now modify themselves while they ran (such programs are called

self-modifying programs). This introduced a new way for a program to fail:

faulty logic in the program could cause it to damage itself. This is one source

of the general protection fault famous in MS-DOS and the blue

screen of death famous in Windows.

Today,

one of the most notable characteristics of a computer is the fact that its

ability to be reprogrammed allows it to contribute to a wide

variety of endeavors, such as the following completely unrelated fields:

- the creation of special effects for movies,
- the compression of music to allow more minutes of music to fit within the
  limited memory of an MP3 player,
- the observation of car tire rotation to detect and prevent skids in an
  anti-lock braking system (ABS),
- the analysis of the writing style in Shakespeare's work with the goal of
  proving whether a single individual really was responsible for all these
  pieces.

By

the end of the 1950's computers were no longer one-of-a-kind hand built devices

owned only by universities and government research labs. Eckert and Mauchly left

the

A

reel-to-reel tape drive [photo courtesy of The

ENIAC

was unquestionably the origin of the

If

you learned computer programming in the 1970's, you dealt with what today are

called mainframe computers, such as the IBM 7090 (shown below),

IBM 360, or IBM 370.

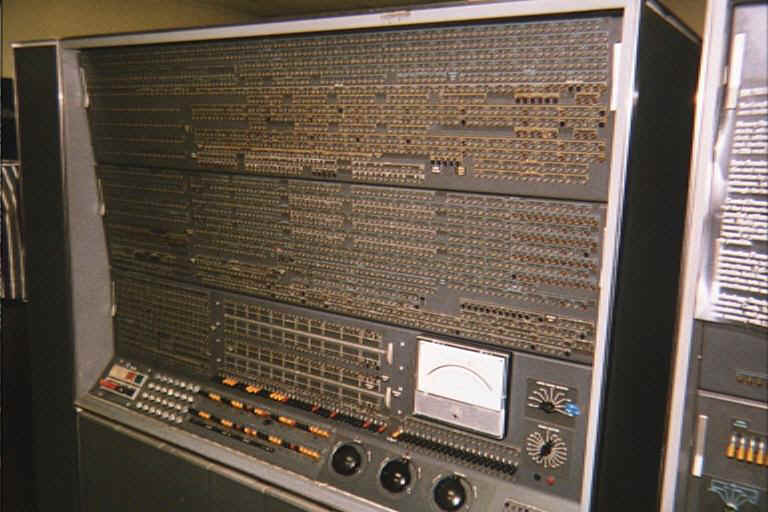

The

IBM 7094, a typical mainframe computer [photo courtesy of IBM]

There

were 2 ways to interact with a mainframe. The first was called time

sharing because the computer gave each user a tiny sliver of time in a

round-robin fashion. Perhaps 100 users would be simultaneously logged on, each

typing on a teletype such as the following:

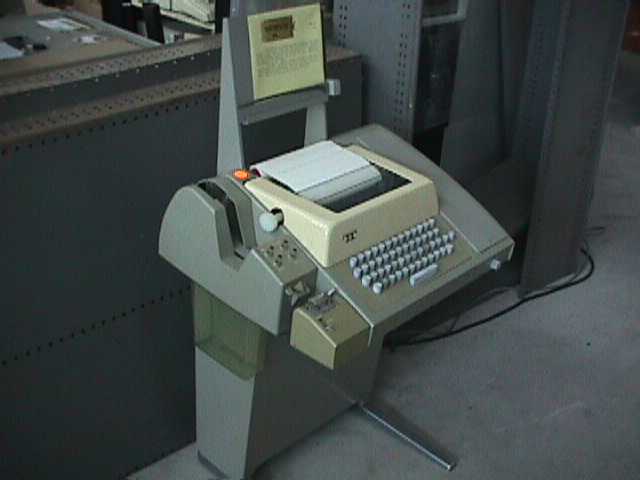

The

Teletype was the standard mechanism used to interact with a time-sharing

computer

A

teletype was a motorized typewriter that could transmit your keystrokes to the

mainframe and then print the computer's response on its roll of paper. You typed

a single line of text, hit the carriage return button, and waited for the

teletype to begin noisily printing the computer's response (at a whopping 10

characters per second). On the left-hand side of the teletype in the prior

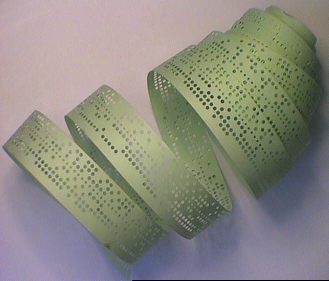

picture you can observe a paper tape reader and writer (i.e., puncher). Here's a

close-up of paper tape:

Three

views of paper tape

After

observing the holes in paper tape it is perhaps obvious why all computers use

binary numbers to represent data: a binary bit (that is, one digit of a binary

number) can only have the value of 0 or 1 (just as a decimal digit can only have

the value of 0 thru 9). Something which can only take two states is very easy to

manufacture, control, and sense. In the case of paper tape, the hole has either

been punched or it has not. Electro-mechanical computers such as the Mark I used

relays to represent data because a relay (which is just a switch driven by an electromagnet)

can only be open or closed. The earliest all-electronic computers used vacuum

tubes as switches: they too were either open or closed. Transistors replaced

vacuum tubes because they too could act as switches but were smaller, cheaper,

and consumed less power.
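The link between binary digits and holes in a tape can be sketched in Python. The helper names `to_bits` and `tape_row`, the 8-position row width, and the `o`/`.` drawing convention are all assumptions made for this illustration; real paper tape formats varied.

```python
def to_bits(value: int, width: int = 8) -> str:
    """Return the binary digits of a non-negative integer, zero-padded to width."""
    return format(value, f"0{width}b")

def tape_row(value: int, width: int = 8) -> str:
    """Draw one row of hypothetical tape: 'o' is a punched hole (1), '.' is no hole (0)."""
    return to_bits(value, width).replace("1", "o").replace("0", ".")

# 65 is 64 + 1, so only two positions are punched.
print(to_bits(65))
print(tape_row(65))
```

Each position is either punched or not, which is exactly the two-state property that makes binary easy to manufacture, control, and sense.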

Paper

tape has a long history as well. It was first used as an information storage

medium by Sir Charles Wheatstone, who used it to store Morse code that was

arriving via the newly invented telegraph (incidentally, Wheatstone was also the

inventor of the concertina).

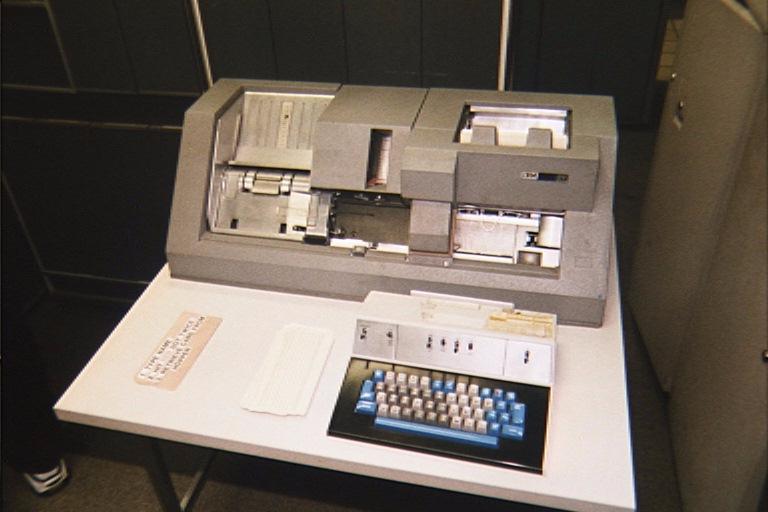

The

alternative to time sharing was batch mode processing, where the

computer gives its full attention to your program. In exchange for getting the

computer's full attention at run-time, you had to agree to prepare your program

off-line on a key punch machine which generated punch cards.

An

IBM Key Punch machine which operates like a typewriter except it produces

punched cards rather than a printed sheet of paper

University

students in the 1970's bought blank cards a linear foot at a time from the

university bookstore. Each card could hold only 1 program statement. To submit

your program to the mainframe, you placed your stack of cards in the hopper of a

card reader. Your program would be run whenever the computer made it that far.

You often submitted your deck and then went to dinner or to bed and came back

later hoping to see a successful printout showing your results. Obviously, a

program run in batch mode could not be interactive.

But

things changed fast. By the 1990's a university student would typically own his

own computer and have exclusive use of it in his dorm room.

The

original IBM Personal Computer (PC)

This

transformation was a result of the invention of the microprocessor.

A microprocessor (uP) is a computer that is fabricated on an integrated circuit

(IC). Computers had been around for 20 years before the first microprocessor was

developed at Intel in 1971. The micro in the name microprocessor

refers to the physical size. Intel didn't invent the electronic computer. But

they were the first to succeed in cramming an entire computer on a single chip

(IC). Intel was started in 1968 and initially produced only semiconductor memory

(Intel invented both the DRAM and the EPROM, two memory technologies that are

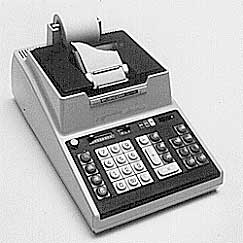

still going strong today). In 1969 they were approached by Busicom, a Japanese

manufacturer of high performance calculators (these were typewriter sized units,

the first shirt-pocket sized scientific calculator was the Hewlett-Packard HP35

introduced in 1972). Busicom wanted Intel to produce 12 custom calculator chips:

one chip dedicated to the keyboard, another chip dedicated to the display,

another for the printer, etc. But integrated circuits were (and are) expensive

to design and this approach would have required Busicom to bear the full expense

of developing 12 new chips since these 12 chips would only be of use to them.

A

typical Busicom desk calculator

But

a new Intel employee (Ted Hoff) convinced Busicom to instead accept a general

purpose computer chip which, like all computers, could be reprogrammed for many

different tasks (like controlling a keyboard, a display, a printer, etc.). Intel

argued that since the chip could be reprogrammed for alternative purposes, the

cost of developing it could be spread out over more users and hence would be

less expensive to each user. The general purpose computer is adapted to each new

purpose by writing a program which is a sequence of instructions

stored in memory (which happened to be Intel's forte). Busicom agreed to pay
Intel to design a general purpose chip, and received a price break in exchange
for allowing Intel to sell the resulting chip to others. But development of the chip

took longer than expected and Busicom pulled out of the project. Intel knew it

had a winner by that point and gladly refunded all of Busicom's investment just

to gain sole rights to the device which they finished on their own.

Thus was born the Intel 4004, the first microprocessor (uP). The 4004 consisted of 2300

transistors and was clocked at 108 kHz (i.e., 108,000 times per second). Compare

this to the 42 million transistors and the 2 GHz clock rate (i.e., 2,000,000,000

times per second) used in a Pentium 4. One of Intel's 4004 chips still functions

aboard the Pioneer 10 spacecraft, which is now the man-made object farthest from

the earth. Curiously, Busicom went bankrupt and never ended up using the

ground-breaking microprocessor.

Intel

followed the 4004 with the 8008 and 8080. Intel priced the 8080 microprocessor

at $360 as an insult to IBM's famous 360 mainframe which cost millions

of dollars. The 8080 was employed in the MITS Altair computer,

which was the world's first personal computer (PC). It was

personal all right: you had to build it yourself from a kit of parts that

arrived in the mail. This kit didn't even include an enclosure and that is the

reason the unit shown below doesn't match the picture on the magazine cover.

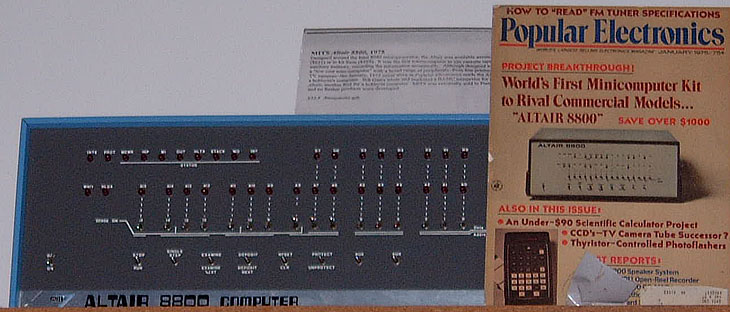

The

Altair 8800, the first PC

A

Harvard freshman by the name of Bill Gates decided to drop out of

college so he could concentrate all his time writing programs for this computer.

This early experience put Bill Gates in the right place at the right time once

IBM decided to standardize on the Intel microprocessors for their line of PCs in

1981. The Intel Pentium 4 used in today's PCs is still compatible with the Intel

8088 used in IBM's first PC.

If

you've enjoyed this history of computers, I encourage you to try your own hand

at programming a computer. That is the only way you will really come to

understand the concepts of looping, subroutines, high and low-level languages,

bits and bytes, etc. I have written a number of Windows programs which teach

computer programming in a fun, visually-engaging setting. I start my students on

a programmable RPN calculator where we learn about programs, statements, program

and data memory, subroutines, logic and syntax errors, stacks, etc. Then we move

on to an 8051 microprocessor (which happens to be the most widespread

microprocessor on earth) where we learn about microprocessors, bits and bytes,

assembly language, addressing modes, etc. Finally, we graduate to the most

powerful language in use today: C++ (pronounced "C plus plus"). These

Windows programs are accompanied by a book's worth of on-line documentation

which serves as a self-study guide, allowing you to teach yourself computer

programming! The home page (URL) for this collection of software is

IBM

continued to develop mechanical calculators for sale to businesses to help with

financial accounting and inventory accounting. One characteristic of both

financial accounting and inventory accounting is that although you need to

subtract, you don't need negative numbers and you really don't have to multiply

since multiplication can be accomplished via repeated addition.
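The remark that multiplication can be accomplished via repeated addition can be sketched in Python. The function name `multiply_by_repeated_addition` is made up for this illustration, and the sketch assumes the second operand is a non-negative whole number, which fits the accounting setting described.

```python
def multiply_by_repeated_addition(a: int, b: int) -> int:
    """Compute a * b using only addition; b must be a non-negative integer."""
    total = 0
    for _ in range(b):
        total += a   # add a to the running total, b times over
    return total

print(multiply_by_repeated_addition(7, 6))
```

A machine that can only add therefore needs no separate multiplying mechanism, at the cost of taking longer on large multipliers.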

One

early success was the Harvard Mark I computer which was built as a

partnership between Harvard and IBM in 1944. This was the first programmable

digital computer made in the

The

Harvard Mark I: an electro-mechanical computer

You

can see the 50 ft rotating shaft in the bottom of the prior photo. This shaft

was a central power source for the entire machine. This design feature was

reminiscent of the days when waterpower was used to run a machine shop and each

lathe or other tool was driven by a belt connected to a single overhead shaft

which was turned by an outside waterwheel.

A

central shaft driven by an outside waterwheel and connected to each machine by

overhead belts was the customary power source for all the machines in a factory

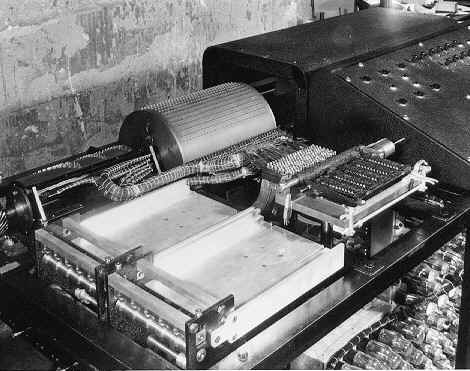

Here's

a close-up of one of the Mark I's four paper tape readers. A paper tape was an

improvement over a box of punched cards as anyone who has ever dropped -- and

thus shuffled -- his "stack" knows.

One

of the four paper tape readers on the Harvard Mark I (you can observe the

punched paper roll emerging from the bottom)

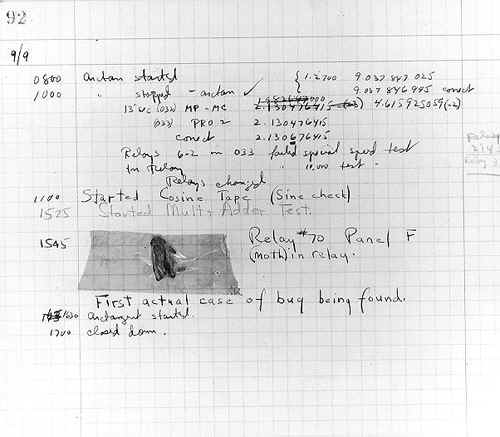

One

of the primary programmers for the Mark I was a woman, Grace Hopper.

Hopper found the first computer "bug": a dead moth that had gotten

into the Mark I and whose wings were blocking the reading of the holes in the

paper tape. The word "bug" had been used to describe a defect since at

least 1889 but Hopper is credited with coining the word "debugging" to

describe the work to eliminate program faults.

The

first computer bug [photo © 2002 IEEE]

In

1953 Grace Hopper invented the first high-level language, "Flow-matic".

This language eventually became COBOL which was the language most affected by

the infamous Y2K problem. A high-level language is designed to be more

understandable by humans than is the binary language understood by the computing

machinery. A high-level language is worthless without a program -- known as a compiler

-- to translate it into the binary language of the computer and hence Grace

Hopper also constructed the world's first compiler. Grace remained active as a

Rear Admiral in the Navy Reserves until she was 79 (another record).

The Mark I operated on numbers that were 23 digits wide. It could add or
subtract two of these numbers in three-tenths of a second, multiply them in
four seconds, and divide them in ten seconds. Forty-five years later computers
could perform an addition in a billionth of a second! Even though the Mark I
had three quarters of a million components, it could only store 72 numbers!
Today, home computers can store 30 million numbers in RAM and another 10
billion numbers on their hard disk. Today, a number can be pulled from RAM
after a delay of only a few billionths of a second, and from a hard disk after
a delay of only a few thousandths of a second. This kind of speed is obviously
impossible for a machine that must move a rotating shaft, and that is why
electronic computers killed off their mechanical predecessors.
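To put those figures side by side, here is a minimal sketch that simply divides the numbers quoted above. The variable and function names are illustrative assumptions, and the inputs are the rounded figures from the text, not measurements.

```python
# Figures quoted in the text above; the names are illustrative only.
MARK_I_SECONDS = {"add": 0.3, "multiply": 4.0, "divide": 10.0}
MODERN_ADD_SECONDS = 1e-9          # "a billionth of a second"
MARK_I_STORED_NUMBERS = 72         # numbers the Mark I could hold
HOME_PC_RAM_NUMBERS = 30_000_000   # numbers a home computer holds in RAM


def ratio(larger, smaller):
    """Return how many times larger the first quantity is than the second."""
    return larger / smaller


# How many times faster a later computer adds than the Mark I did.
add_speedup = ratio(MARK_I_SECONDS["add"], MODERN_ADD_SECONDS)

# How many times more numbers fit in RAM than in the Mark I's storage.
storage_growth = ratio(HOME_PC_RAM_NUMBERS, MARK_I_STORED_NUMBERS)

print(f"Addition speedup: about {add_speedup:.0e} times")
print(f"RAM capacity growth: about {storage_growth:,.0f} times")
```

By these rough numbers, addition became about 300 million times faster, while the count of numbers held in working storage grew by a factor of a bit over 400 thousand.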

On a humorous note, the principal designer of the Mark I, Howard Aiken of
Harvard, estimated in 1947 that six electronic digital computers would be
sufficient to satisfy the computing needs of the entire

(that's just the operator's console, here's the rest of its 33-foot length:)

to be bested by a home computer of 1976 such as this Apple I which sold for
only $600:

The Apple I, which was sold as a do-it-yourself kit (without the lovely case
seen here)

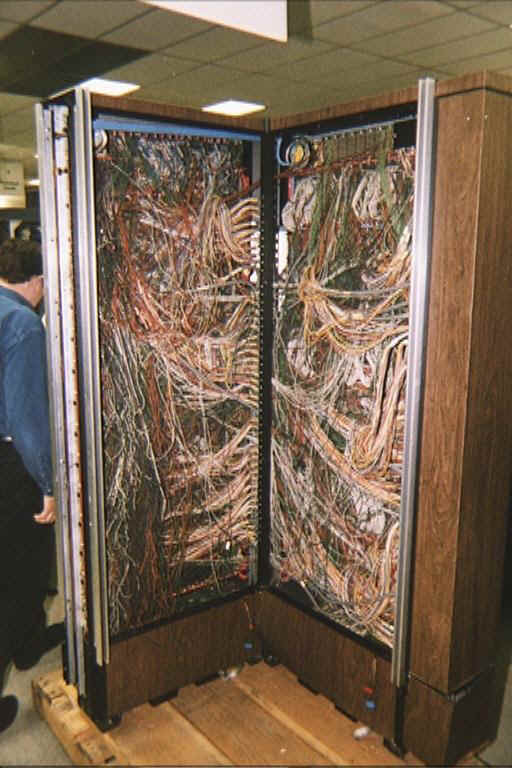

Computers had been incredibly expensive because they required so much hand
assembly, such as the wiring seen in this CDC 7600:

Typical

wiring in an early mainframe computer [photo courtesy The

The

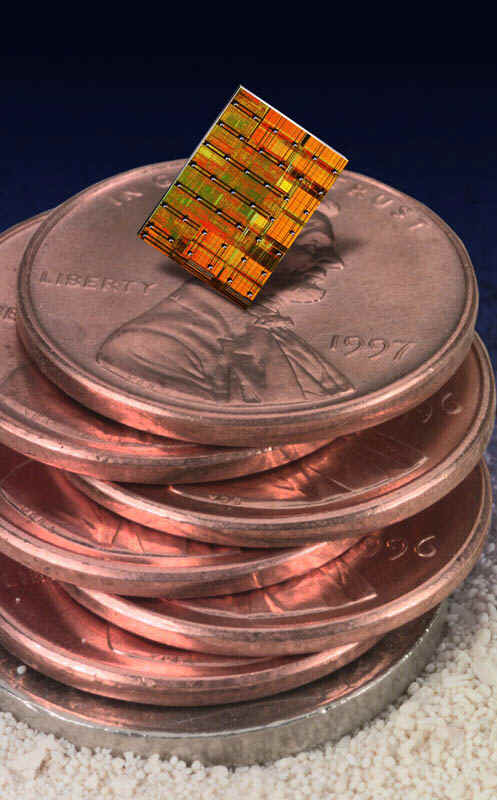

microelectronics revolution is what allowed the amount of

hand-crafted wiring seen in the prior photo to be mass-produced as an integrated

circuit which is a small sliver of silicon the size of your thumbnail .

An integrated circuit ("silicon chip") [photo courtesy of IBM]

The primary advantage of an integrated circuit is not that the transistors
(switches) are minuscule (that's the secondary advantage), but rather that
millions of transistors can be created and interconnected in a mass-production
process. All the elements on the integrated circuit are fabricated
simultaneously via a small number (maybe 12) of optical masks that define the
geometry of each layer. This speeds up the process of fabricating the computer
-- and hence reduces its cost -- just as Gutenberg's printing press sped up the
fabrication of books and thereby made them affordable to all.

The IBM Stretch computer of 1959 needed its 33-foot length to hold the 150,000
transistors it contained. These transistors were tremendously smaller than the
vacuum tubes they replaced, but they were still individual elements requiring
individual assembly. By the early 1980s this many transistors could be
simultaneously fabricated on an integrated circuit. Today's Pentium 4
microprocessor contains 42,000,000 transistors in this same thumbnail-sized
piece of silicon.

It's humorous to remember that in between the Stretch machine (which would be
called a mainframe today) and the Apple I (a desktop computer) there was an
entire industry segment referred to as mini-computers, such as the following
PDP-12 computer of 1969:

The DEC PDP-12

Sure looks "mini", huh? But we're getting ahead of our story.

One of the earliest attempts to build an all-electronic (that is, no gears,
cams, belts, shafts, etc.) digital computer was begun in 1937 by J. V.
Atanasoff, a professor of physics and mathematics at Iowa State University. By
1941 he and his graduate student, Clifford Berry, had succeeded in building a
machine that could solve 29 simultaneous equations with 29 unknowns. This
machine was the first to store data as a charge on a capacitor, which is how
today's computers store information in their main memory (DRAM or dynamic
RAM). As far as its inventors were aware, it was also the first to employ
binary arithmetic. However, the machine was not programmable, it lacked a
conditional branch, its design was appropriate for only one type of
mathematical problem, and it was not further pursued after World War II. Its
inventors didn't even bother to preserve the machine, and it was dismantled by
those who moved into the room where it lay abandoned.

The Atanasoff-Berry Computer [photo © 2002 IEEE]

Another candidate for granddaddy of the modern computer was Colossus, built
during World War II by

Two views of the code-breaking Colossus of

The Harvard Mark I, the Atanasoff-Berry computer, and the British Colossus all
made important contributions. American and British computer pioneers were
still arguing over who was first to do what when, in 1965, the work of the
German Konrad Zuse was published for the first time in English. Scooped! Zuse
had built a sequence of general-purpose computers in Nazi Germany. The first,
the Z1, was built between 1936 and 1938 in the parlor of his parents' home.

The Zuse Z1 in its residential setting

Zuse's third machine, the Z3, built in 1941, was probably the first
operational, general-purpose, programmable (that is, software controlled)
digital computer. Without knowledge of any calculating machine inventors since
Leibniz (who lived in the 1600s), Zuse reinvented Babbage's concept of
programming and decided on his own to employ binary representation for numbers
(Babbage had advocated decimal). The Z3 was destroyed by an Allied bombing
raid. The Z1 and Z2 met the same fate, and the Z4 survived only because Zuse
hauled it in a wagon up into the mountains. Zuse's accomplishments are all the
more incredible given the context of the material and manpower shortages in